Indoor autonomous flight with ArduCopter, ROS and AprilTag Detection¶

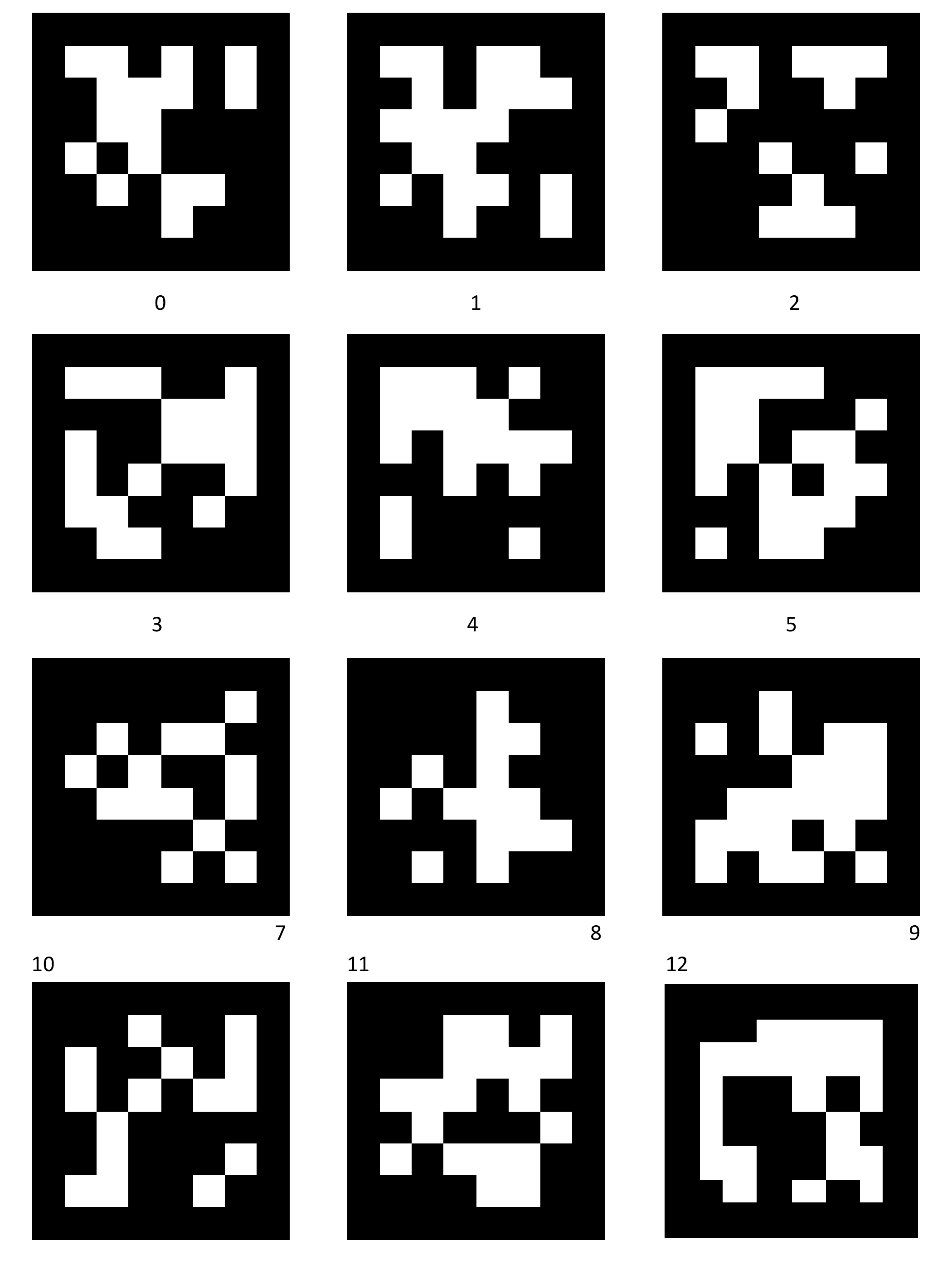

This wiki page describes how to setup a system capable to realize indoor autonomous flight. The system is based on a quadcopter with a Raspberry Pi 3 and a USB camera. Images from the camera are used to calculate poses estimation on the Raspberry Pi and the result are sent as MAVLink messages to the Flight Controller. The camera is downward looking and on the floor there is an AprilTag board like this:

System overview¶

The system uses ROS for all the tasks it has to do. The images from a USB camera module are captured by usb_cam node, the pose estimations are calculated by apriltag_ros node, then processed by vision_to_mavros node, and the relevant messages are sent to the Flight Controller using MAVROS. All of these ROS packages runs on the Raspberry Pi 3.

The usb_cam node publishes camera/image and camera/camera_info topics, the apriltag_ros node subscribes to these topics and publish a camera_pose message to the mavros/vision_pose/pose topic, mavros translates ROS messages in MAVLink messages and send it to the Flight Controller.

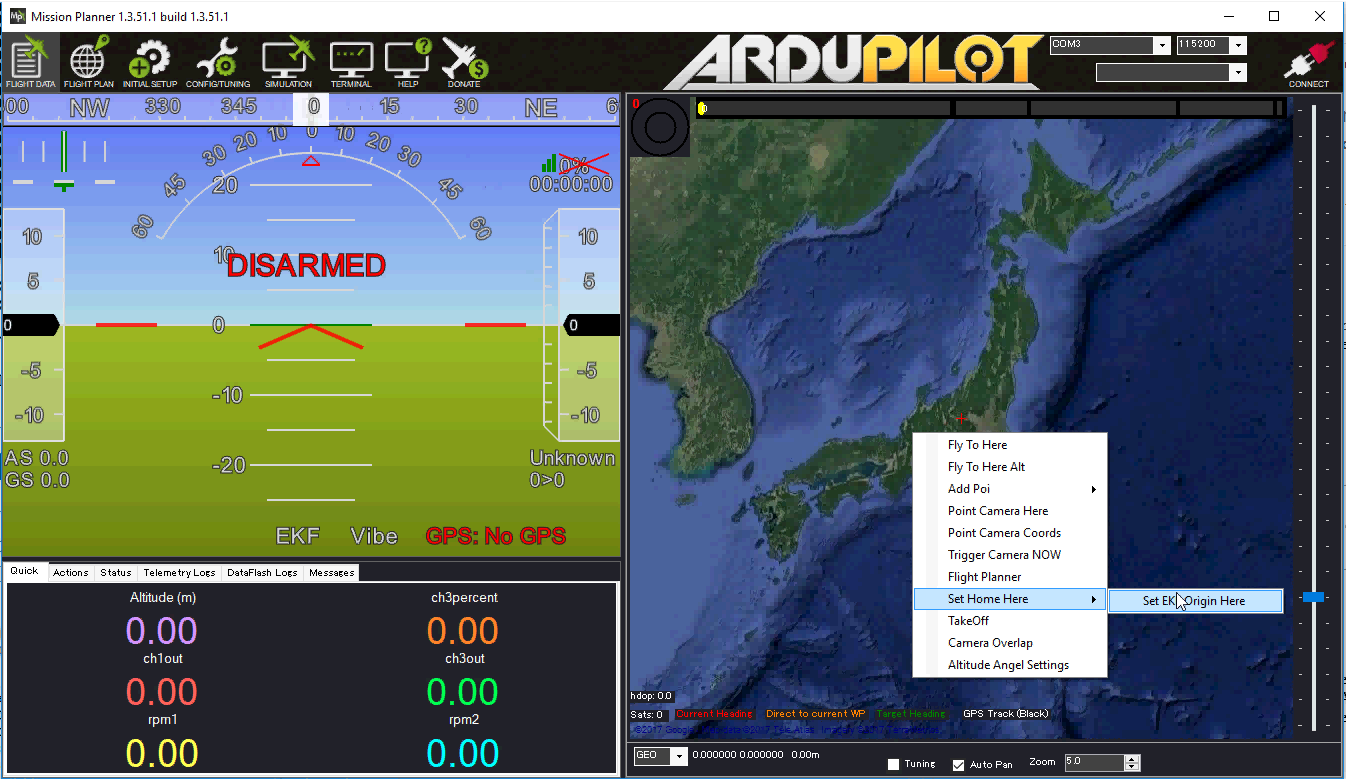

The messages SET_GPS_GLOBAL_ORIGIN and a SET_HOME_POSITION are sent before the system is ready to run. Two methods of setting EKF home:

with Mission Planner (Right Click > Set EKF Home > Set Origin)

with the script set_origin.py.

The Flight Controller and the Raspberry Pi 3 on the quadcopter are connected via serial port whereas the Raspberry Pi 3 and the desktop PC are connected via WiFi. The desktop PC is used only for configuration and visualization purposes. RViz is used for visualization on PC in ROS.

Components of the system¶

A small quadcopter equipped with Pixhack Autopilot, CUAV PX4FLOW, TeraRanger One. With this setup, you can follow the wiki to achieve non-GPS flight with PX4FLOW.

Raspberry Pi 3 Model B with a 16GB micro SD card running Ubuntu Mate 16.04, connected to a USB camera down-facing to the ground, with the x-axis of the camera to the right.

Note

There is no hard restriction on the mounting orientation of the camera beside it being down-facing, but frame transformation is needed to align the frames for different camera orientation.

Software setup¶

Install ROS driver for your camera:

For USB camera:

sudo apt-get install ros-kinetic-usb-camFor RPi camera module: you can follow this instruction to enable the camera, then install raspicam_node.

Install Apriltag library, Apriltag ROS wrapper and vision_to_mavros packages.

# Install Apriltag library

cd

git clone https://github.com/AprilRobotics/apriltag.git

cd apriltag

cmake .

sudo make install

# Install Apriltag ROS wrapper

cd ~/catkin_ws/src

git clone https://github.com/hoangthien94/apriltags2_ros.git

git clone https://github.com/hoangthien94/vision_to_mavros.git

cd ../

catkin_make

Camera calibration¶

Follow the ROS wiki to calibrate and obtain the calibration file for your camera.

Replace the

camera_info_url paramin the gsoc.launch file with the correct path to the calibration file.

Configure ArduPilot¶

Connect to the flight controller with a ground station (i.e. Mission Planner) and check that the following parameters are set as shown below:

AHRS_EKF_TYPE 2

BRD_RTC_TYPES 2

EKF2_ENABLE 1

EKF3_ENABLE 0

EK2_GPS_TYPE 3

EK2_POSNE_M_NSE 0.1

EK2_VELD_M_NSE 0.1

EK2_VELNE_M_NSE 0.1

EK2_EXTNAV_DELAY 80

GPS_TYPE 0

COMPASS_USE 0

COMPASS_USE2 0

COMPASS_USE3 0

SERIAL1_BAUD 921 (the serial port used to connect to Raspberry Pi)

SERIAL1_PROTOCOL 2

SYSID_MYGCS 1 (to accept control from mavros)

Instruction to reproduce the system¶

1. Running all the nodes¶

First, let’s test each ROS node separately and fix any problems that arise:

usb_camnode:On RPi:

roslaunch usb_cam usb_cam-test.launchIf RPi is not connected to a display, view the raw image on PC with Linux Ubuntu:

export ROS_MATER_URI=http://<rpi-ip>:11311 && rqt_image_viewVerify that there are images coming from the camera.

MAVROSnode:On RPi:

roslaunch mavros apm.launch fcu_url:=<tty-port>:<baud-rate>Verify that MAVROS is running OK. For example,

rostopic echo /mavros/stateshould show that FCU is “CONNECTED”.

Apriltag nodeandvision_to_mavrosnode:Make sure the

camera_info_urlpoints to the correct path to your camera’s calibration file.On RPi:

roslaunch vision_to_mavros apriltags_to_mavros.launchOpen up RViz and view

/tfand/mavros/vision_pose/posetopics. With/tf, you should see the camera pose in the tag frame, with z-axis pointing downwards. If your camera’s x-axis is pointing to the right, then/mavros/vision_pose/posewill be aligned with body frame. If the camera’s x-axis is pointing in a different direction, you need to modify the params ofvision_to_mavrosaccordingly.

2. Ground test¶

If each node can run successfully, you can perform ground test:

On RPi: launch all the nodes as described above. View the topic

/mavros/vision_pose/poseon RViz. Move the vehicle around and see if the pose changes according to the movement.Set EKF home by sending the MAVLink messages SET_GPS_GLOBAL_ORIGIN and SET_HOME_POSITION.

Using Mission Planner: Right-click on any point on the map >

Set Home Here>Set EKF Origin Here.

Using code: you can use this Python script set_origin.py.

Install

pymavlink: Follow the instructions here.Run the script:

rosrun vision_to_mavros set_origin.py.

After the origin of the EKF is set, a quadcopter icon will appear on the map.

Hold the vehicle up, move around while keeping the tag board in the field of view of the camera, and observe the trajectory of the vehicle on Mission Planner.

If the last step is successful, you can go ahead with flight test.

3. Flight test¶

Takeoff in Stabilize to check whether the quadcopter is stable.

At a height that the camera can have a good view of the tags, switch to Alt-Hold to adjust level position. Observe the feedback on RViz as well as Mission Planner to see if tags are detected.

Take a look at Mission Planner map, confirm that the system is still tracking.

Switch to Loiter, but always ready to switch back to Alt-Hold if anything goes awry.

Otherwise, the quadcopter should hover stably above the tags.

Note

For external navigation data to be accepted by EKF, the data rate needs to be higher than a certain threshold (usually 10Hz). If you are also using RPi, firstly we need to tune the params related to computational costs to achieve an acceptable detection rate, either by increasing tag_decimate, which will increase detection rate at the expense of lower accuracy, or increase tag_threads, if you are not running anything else and have some CPU to spare. The related tuning parameters are located in the file settings.yaml.

Some Useful links¶

Original blog post.

Indoor autonomous flight with ArduCopter, ROS and Aruco Boards - wiki page and blog post.

Indoor Autonomous Flight with Cube, ROS and Aruco Board - blog post.

Vision Positioning Experiments using SKYVIPER - blog post.

Experiment with Visual Odometry - ROVIO, blog posts part 1 and part 2.

Indoor flight with external navigation data - blog post.